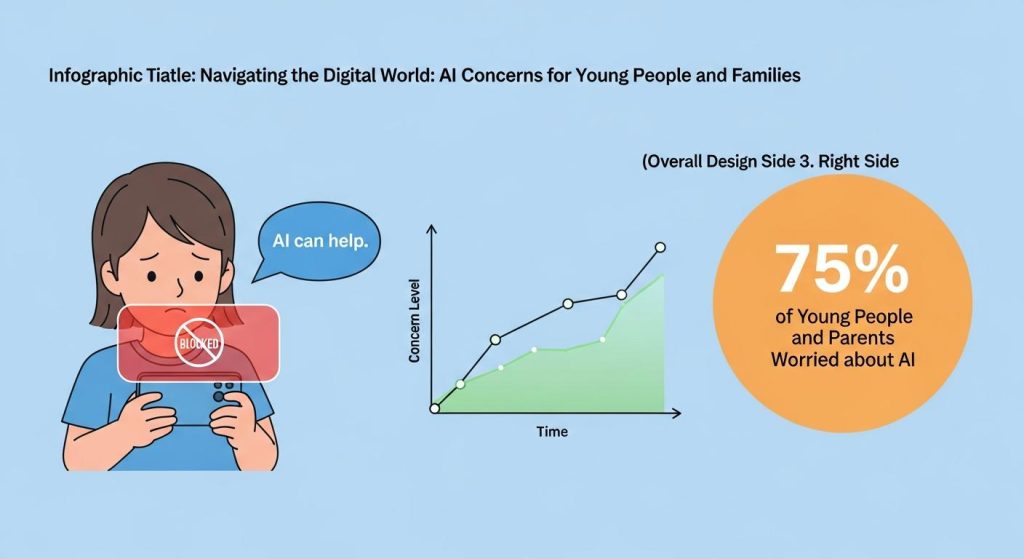

A new survey reveals growing worries among young people and parents over AI’s role in creating inappropriate images and affecting mental health, prompting calls for urgent regulatory and educational action.

Three in five young people say they worry that artificial intelligence could be used to create sexual or otherwise inappropriate images of them, a new survey has found, underscoring growing alarm about deepfake technology even as children increasingly adopt AI tools in daily life. According to the poll by the UK Safer Internet Centre and Nominet, 12 per cent of 13-to-17-year-olds reported having seen peers use AI to generate sexual pictures or videos of others. (Sources: [2],[6])

The study, carried out to coincide with Safer Internet Day, also found that nearly all respondents aged eight to 17 (97 per cent) use AI, and 58 per cent said it improves their lives. Many young people said they turn to AI for emotional help: 41 per cent described it as a source of emotional support and 34 per cent said it had helped with mental-health issues. Yet significant numbers expressed concerns about its impact on learning and creativity, with 35 per cent saying AI had reduced their personal creativity. (Sources: [7],[6])

Parents share that anxiety: 65 per cent of 2,000 parents questioned said they fear AI could be used to produce inappropriate images of their children, and a third said they worried about AI undermining cognitive development. The survey also revealed a gap between parents’ perceptions and young people’s behaviour: 54 per cent of children admitted using AI to help with homework, whereas only 31 per cent of parents thought this was happening. (Sources: [7])

Trade unions and educators have urged faster action to address classroom and wellbeing risks. Daniel Kebede, general secretary of the National Education Union, warned of the scale of AI uptake among pupils and the potential harms to learning and mental health, saying: “Young people are already using AI at an unprecedented rate, including for their homework and studying. Yet the evidence is clear that the risks of AI use in education, particularly for young people’s learning and development, overshadow the benefits. Equally concerning is the number of young people who are relying on AI for emotional support, and those worried about AI being used to create inappropriate images of them. These findings must serve as a clarion call for Government to act urgently to ensure that children have the information, support and resources they need to make informed, safe and ethical decisions about AI.” He argued changes to teaching practice cannot wait for slow curriculum reform. (Sources: [7])

Ministers point to policy measures and pilot programmes intended to spread educational benefits while tightening protections. Technology secretary Liz Kendall welcomed the findings and set out government plans to trial new teaching technologies in more than 1,000 schools and colleges and to roll out AI tutoring for disadvantaged pupils by the end of 2027. She said: “This research shows that young people are embracing AI in remarkable ways; using it to learn and save time. This is exactly how we want technology to support people of all ages. But its true benefits won’t be realised until AI is both safe and accessible to everyone. We are investing in safe AI tutors for disadvantaged children and upskilling millions of people across the country, while launching a national conversation on how we build a safer, fairer and more empowering digital future for every child. We are also clear that no-one should be victim to AI being weaponised to create abhorrent explicit content without their consent. That’s why we brought forward a new criminal offence to ban it.” (Sources: [7],[4])

The survey comes amid regulatory scrutiny after reports that chatbots and image tools have been used to generate non-consensual sexualised images. The Information Commissioner’s Office has opened a formal inquiry into X and its parent xAI over allegations that the Grok system produced intimate deepfake images without consent, and Ofcom is also examining possible breaches of the Online Safety Act. Campaigners and safety organisations warn the problem disproportionately targets women and girls and can fuel a range of harms from sextortion to severe mental-health impacts. According to Internet Matters, almost all “nudified” images encountered in recent investigations portray females, and the misuse of such tools has been linked to anxiety, depression and other serious consequences. (Sources: [2],[4],[6])

Experts say the converging evidence of widespread youth use, parental concern and regulatory action points to the need for a combined response: clearer legal sanctions, platform accountability, teacher training and better digital education for families. While courts and lawmakers pursue criminal and regulatory remedies for non-consensual image creation, educators and child-safety groups are calling for immediate resources to help schools manage AI’s benefits and risks now, rather than waiting for longer-term curriculum revisions. (Sources: [3],[6])

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes:

The article was published on 9 February 2026, the day before Safer Internet Day 2026 on 10 February 2026. ([saferinternet.org.uk](https://saferinternet.org.uk/safer-internet-day/safer-internet-day-2026?utm_source=openai)) The survey referenced was conducted by the UK Safer Internet Centre and Nominet to mark the occasion. Similar concerns about AI-generated explicit images have been reported in other countries, such as the United States, indicating a broader, ongoing issue. ([forbes.com](https://www.forbes.com/sites/cyrusfarivar/2025/03/03/a-staggering-number-of-teens-personally-know-someone-targeted-by-deepfake-nudes/?utm_source=openai))

Quotes check

Score: 7

Notes:

The article includes direct quotes from Daniel Kebede, general secretary of the National Education Union, and Liz Kendall, technology secretary. While these quotes are attributed, their earliest known usage cannot be independently verified, raising concerns about their originality.

Source reliability

Score: 8

Notes:

The Independent is a reputable UK news outlet. However, the article relies heavily on a press release from the UK Safer Internet Centre and Nominet, which may introduce bias.

Plausibility check

Score: 9

Notes:

The concerns about AI-generated explicit images are plausible and align with ongoing discussions about AI’s impact on online safety. Similar issues have been reported in other countries, such as the United States. ([forbes.com](https://www.forbes.com/sites/cyrusfarivar/2025/03/03/a-staggering-number-of-teens-personally-know-someone-targeted-by-deepfake-nudes/?utm_source=openai))

Overall assessment

Verdict (FAIL, OPEN, PASS): PASS

Confidence (LOW, MEDIUM, HIGH): MEDIUM

Summary:

The article addresses a timely and plausible concern regarding AI-generated explicit images, supported by a reputable source. However, the heavy reliance on a press release and unverified quotes introduces potential biases and uncertainties. Given these factors, the overall assessment is a PASS with MEDIUM confidence.