**Rochester**: Researchers led by Associate Professor Edmund Lalor are investigating how the brains of cochlear implant users combine visual and auditory speech cues. The NIH-funded study seeks to improve hearing technologies by clarifying how multisensory speech is processed in noisy environments.

Researchers are setting out to understand how the brain integrates visual speech cues with auditory signals, focusing in particular on people with cochlear implants. The study examines multisensory speech processing in noisy environments, and its findings could inform advances in hearing technologies.

The research is spearheaded by Edmund Lalor, an associate professor of biomedical engineering and neuroscience at the University of Rochester, who highlights the complexity of the integration process. “Your visual cortex is at the back of your brain and your auditory cortex is on the temporal lobes,” Lalor explained in remarks to “The Hearing Review.” He further noted, “How that information merges together in the brain is not very well understood.”

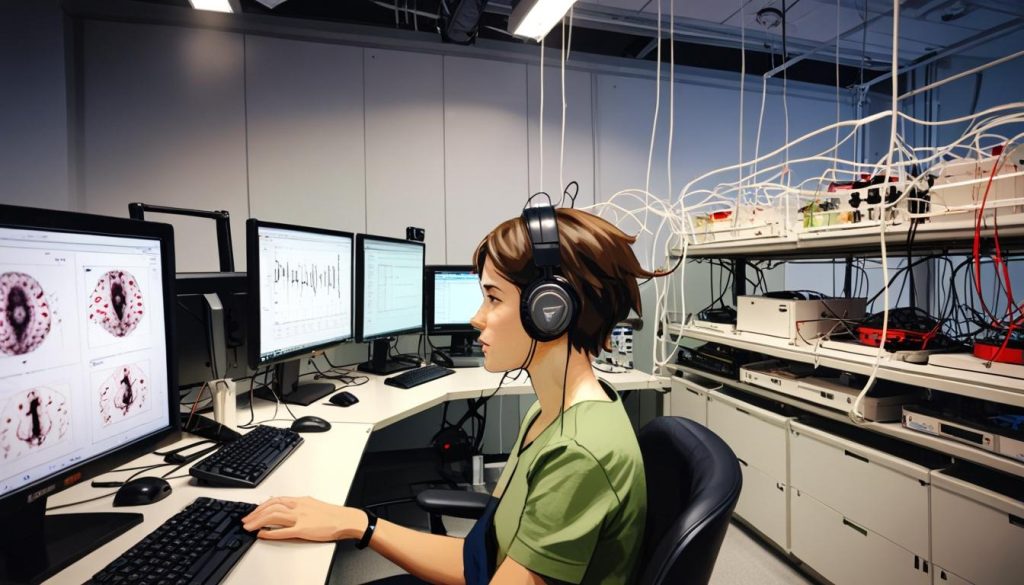

To investigate this integration, scientists have used noninvasive electroencephalography (EEG) to study brain responses to basic auditory stimuli. Lalor’s team has observed patterns in how the specific shapes the mouth makes during pronunciation affect auditory comprehension. They aim to build on this foundation by studying spoken language in more natural, continuous speech settings.

The National Institutes of Health (NIH) is supporting this research with an estimated $2.3 million in funding over the next five years. The award follows an earlier seed grant from the University’s Del Monte Institute for Neuroscience, which initiated the project.

A key aspect of the study involves monitoring the brainwaves of people who use cochlear implants, for whom the auditory environment can be particularly challenging. The team plans to recruit 250 participants with cochlear implants, who will watch and listen to multisensory speech while EEG caps record their brain responses.

Lalor elaborated on why the timing of cochlear implantation matters. “The big idea is that if people get cochlear implants implanted at age one, while maybe they’ve missed out on a year of auditory input, perhaps their audio system will still wire up in a way that is fairly similar to a hearing individual,” he stated. In contrast, he suggested that people who receive implants later may come to rely on visual cues differently because they missed critical periods of auditory system development.

The team is working in collaboration with co-principal investigator Professor Matthew Dye, who leads the doctoral program in cognitive science at the Rochester Institute of Technology and directs the National Technical Institute for the Deaf Sensory, Perceptual, and Cognitive Ecology Center. The researchers face several challenges in EEG data collection, notably that electrical activity from the cochlear implants themselves complicates analysis of the EEG readings.

“It will require some heavy lifting on the engineering side, but we have great students here at Rochester who can help us use signal processing, engineering analysis, and computational modeling to examine these data in a different way that makes it feasible for us to use,” Lalor remarked.

Ultimately, this research aims to deepen the understanding of how audiovisual information is processed in the brain, with the long-term objective of developing improved technologies that cater specifically to the needs of individuals who are deaf or hard of hearing.

Source: Noah Wire Services