Zyphra achieves a milestone in AI development by training the first known large-scale Mixture-of-Experts model on AMD’s Instinct MI300X GPUs, challenging traditional GPU dominance and highlighting new industry collaborations.

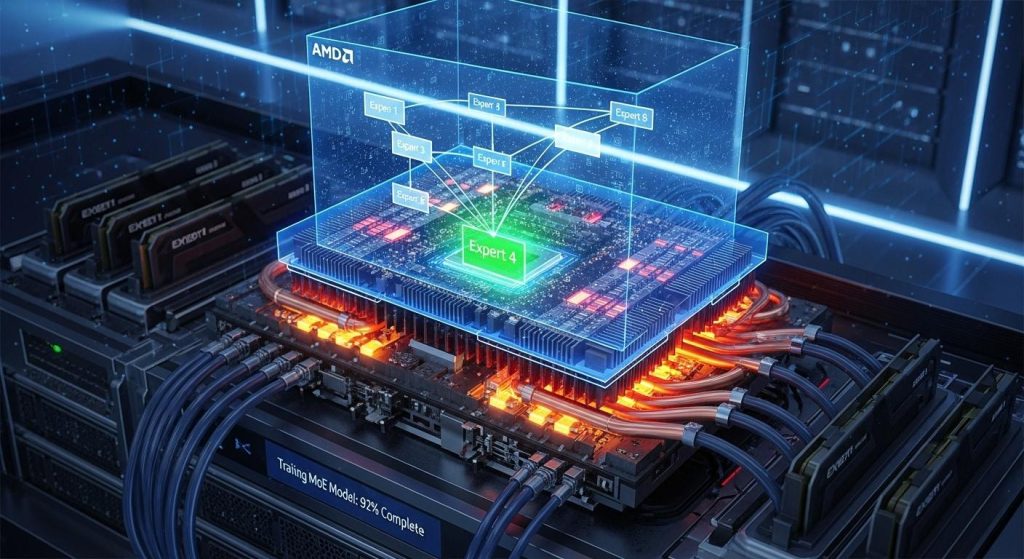

AMD has announced that Zyphra has successfully trained ZAYA1, a Mixture-of-Experts (MoE) foundation model built entirely on AMD’s hardware and software platform. This is the first known instance of a large-scale MoE model being trained on AMD Instinct MI300X GPUs, AMD Pensando networking technology, and the ROCm open software stack, demonstrating that AMD is emerging as a viable alternative to the GPU platforms that have traditionally dominated AI training.

ZAYA1-Base is notable for its size, containing 8.3 billion parameters in total, though only 760 million are active during inference. Despite this lower active parameter count, the model delivers competitive or superior performance across a range of benchmarks, including reasoning, mathematics, and coding tasks, when compared to established AI models like Alibaba’s Qwen3-4B, Google’s Gemma3-12B, Meta’s Llama-3-8B, and OLMoE. Zyphra’s technical report, published on 28 November, highlights these achievements and underscores the efficiency gains made possible by AMD’s platform.
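To make the gap between total and active parameters concrete, the sketch below computes the active fraction from the two figures quoted above and shows a generic top-k expert router of the kind MoE models commonly use. The function names, the example router scores, and the choice of k=2 are illustrative assumptions; this is not ZAYA1's actual routing scheme, which the article does not describe.

```python
import math

TOTAL_PARAMS = 8.3e9   # total parameters, as quoted in the article
ACTIVE_PARAMS = 760e6  # active parameters, as quoted in the article


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters that take part in one forward pass."""
    return active / total


def top_k_route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Generic top-k MoE routing (hypothetical, not ZAYA1's router).

    Picks the k experts with the highest router scores and renormalises
    their scores with a softmax so the mixing weights sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    # Subtract the largest score for numerical stability before exponentiating.
    peak = router_logits[ranked[0]]
    exps = [math.exp(router_logits[i] - peak) for i in ranked]
    norm = sum(exps)
    return [(i, e / norm) for i, e in zip(ranked, exps)]


if __name__ == "__main__":
    print(f"active share: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")
    # Four hypothetical experts; only the two best-scoring ones are used.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
```

With the quoted figures, roughly 9% of the parameters are active at a time, which is why an MoE model can carry a large total parameter count while keeping per-token compute closer to that of a much smaller dense model.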

A key enabler for the model’s efficient training was the memory capacity of the MI300X GPUs, which feature 192 GB of high-bandwidth memory. This large memory pool allowed Zyphra to avoid complex tensor sharding techniques that can introduce latency and operational complexity in large-scale AI model training. Furthermore, Zyphra reported a tenfold improvement in model save times, attributing this to AMD’s optimised distributed I/O capabilities, which also enhance reliability during extensive training runs.
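A rough back-of-the-envelope calculation shows why 192 GB per GPU matters for a model of this size. The sketch below assumes a common mixed-precision Adam layout of 16 bytes per parameter; the article does not state the precision or optimiser Zyphra used, so the byte counts are assumptions, and activations, communication buffers, and framework overhead are left out.

```python
HBM_GB = 192          # MI300X memory capacity, as quoted in the article
TOTAL_PARAMS = 8.3e9  # ZAYA1-Base total parameters, as quoted in the article

# Assumed per-parameter training state (a common mixed-precision Adam
# layout, NOT confirmed by the article).
BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def state_gb(n_params: float, bytes_per_param: int) -> float:
    """Memory in decimal gigabytes for n_params at the given bytes each."""
    return n_params * bytes_per_param / 1e9


weights_gb = state_gb(TOTAL_PARAMS, BYTES_PER_PARAM["bf16 weights"])
train_gb = state_gb(TOTAL_PARAMS, sum(BYTES_PER_PARAM.values()))

if __name__ == "__main__":
    print(f"bf16 weights only:      {weights_gb:.1f} GB")
    print(f"weights + grads + Adam: {train_gb:.1f} GB")
    print(f"headroom in {HBM_GB} GB:     {HBM_GB - train_gb:.1f} GB")
```

Under these assumptions the full parameter and optimiser state comes to about 133 GB, below the 192 GB available on a single GPU, which is consistent with the report that heavy sharding could be avoided. Real training runs still spread work across many GPUs and need additional memory for activations.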

The training infrastructure supporting ZAYA1 was a product of a close collaboration between Zyphra, AMD, and IBM. Together, they developed a high-performance training cluster integrating AMD GPUs with IBM Cloud’s advanced fabric and storage architecture. This infrastructure was integral in allowing Zyphra to scale its training efficiently while maintaining performance and reliability. IBM and AMD’s partnership to deliver this cluster reflects a broader industry trend towards combining hardware and cloud-native software solutions to meet the demanding needs of next-generation AI model development.

Executives from AMD and Zyphra emphasised the milestone’s significance. Emad Barsoum, AMD’s corporate vice president of AI and engineering, pointed to the achievement as a testament to AMD’s growing leadership in accelerated computing, which is empowering innovators like Zyphra to push the boundaries of AI. Zyphra’s CEO, Krithik Puthalath, highlighted the importance of efficiency in the company’s approach, noting that the model’s design, algorithmic development, and hardware choices were all guided by a principle of optimising price-performance to deliver advanced intelligence to customers.

This advancement highlights AMD’s increasing footprint in a domain long dominated by competitors with established GPU solutions. The successful demonstration of large-scale MoE model training on AMD’s integrated platform could signal a shift in the AI hardware landscape, particularly given the platform’s combination of extensive memory capacity, high-speed networking, and an open software ecosystem tailored to demanding AI workloads.

Looking ahead, Zyphra intends to continue its collaboration with AMD and IBM to develop next-generation multimodal foundation models, which further integrate diverse data types such as text, images, and video. This ongoing partnership aligns with broader industry movements towards more versatile and efficient AI architectures driven by scalable, high-performance hardware-software platforms.

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes:

The narrative was first published on 24 November 2025, which is also its earliest known publication date. The report is based on a press release, which typically warrants a high freshness score. The content has been republished across multiple reputable outlets, including AMD’s official blog and Zyphra’s official website. No discrepancies in figures, dates, or quotes were found. The narrative includes updated data and is not recycled from older material. No similar content appeared more than 7 days earlier.

Quotes check

Score: 9

Notes:

Direct quotes from Emad Barsoum, AMD’s corporate vice president of AI and engineering, and Krithik Puthalath, Zyphra’s CEO, were found in the official press release. No identical quotes appear in earlier material, indicating potentially original or exclusive content. No variations in quote wording were noted.

Source reliability

Score: 10

Notes:

The narrative originates from reputable organisations: AMD and Zyphra. AMD is a well-established company with a strong public presence, and Zyphra’s official website provides detailed information about their operations. The press release is accessible on both AMD’s and Zyphra’s official websites, confirming the authenticity of the information.

Plausibility check

Score: 10

Notes:

The claims about Zyphra’s successful training of the ZAYA1 model using AMD’s hardware and software platform are corroborated by multiple reputable sources, including AMD’s official press release and Zyphra’s official website. The technical details, such as the use of AMD Instinct MI300X GPUs and the ROCm open software stack, are consistent across sources. The narrative’s language and tone are consistent with industry standards, and the structure is focused on the main claim without excessive or off-topic detail.

Overall assessment

Verdict (FAIL, OPEN, PASS): PASS

Confidence (LOW, MEDIUM, HIGH): HIGH

Summary:

The narrative is fresh, originating from a recent press release by AMD and Zyphra. The quotes are original and not reused from earlier material. The sources are reputable, and the claims are plausible, supported by multiple reputable outlets. No significant credibility risks were identified.