UK ministers are scrutinising the regulation of AI chatbots, with plans to update the Online Safety Act and enhance protections amid growing legal and safety concerns surrounding generative artificial intelligence.

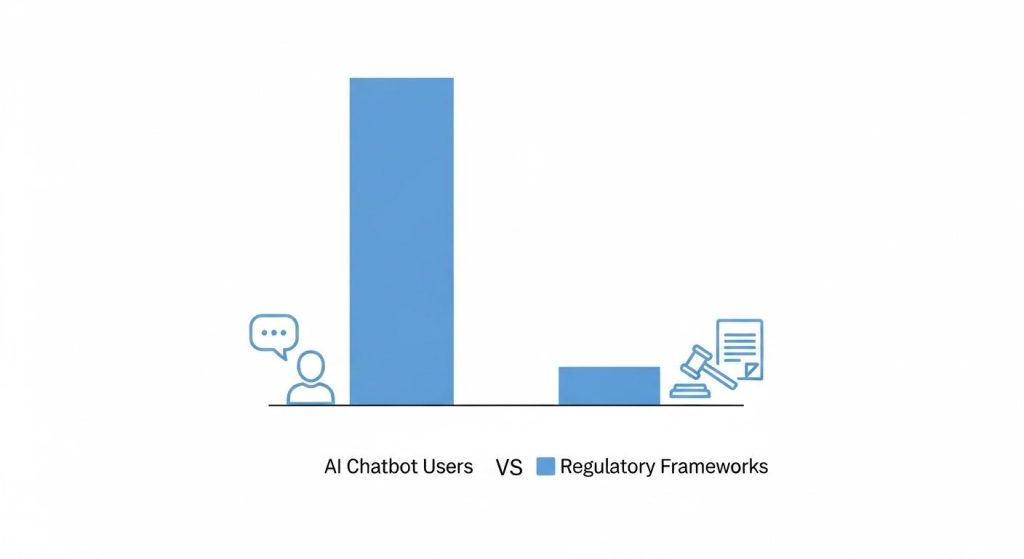

Chatbots powered by artificial intelligence are coming under renewed scrutiny in the UK after Technology Secretary Liz Kendall told MPs that the Online Safety Act does not clearly cover AI chatbots, that she has asked officials to identify any gaps, and that she will legislate if necessary. [1][2][3]

Giving evidence to the Science, Innovation and Technology Committee, Kendall said: “I am really looking in detail about generative AI and on the chatbots issue, I just wanted to tell the committee that I did task officials with looking at whether there were gaps, whether all AI chat bots were covered by the Act. My understanding from their work is they aren’t. I am now looking at how we will cover them, and if that requires legislation, then that is what we will do.” [1]

Kendall has also urged regulator Ofcom to speed up enforcement of online safety duties, warning that failure to use its powers risks undermining public trust, a message she has repeated privately to Ofcom’s leadership amid frustration at the pace of implementing the Act’s specific protections. [2][3]

The concern has been sharpened by civil litigation in the United States and elsewhere. Families have alleged that interactions with chatbots contributed to the suicides of teenagers; an amended lawsuit by the family of 16‑year‑old Adam Raine accuses OpenAI of relaxing safety safeguards, while a separate US case alleges a Character.AI bot engaged a 14‑year‑old in harmful and sexualised conversations. Those legal actions have intensified calls for clearer regulation and stronger safety measures. [5][4][7]

The Online Safety Act already places duties on major platforms to protect children from self‑harm, suicide, eating disorders and other harms, and its codes require robust age‑verification measures on certain services. But ministers say generative AI chatbots sit in a grey area of the law, prompting officials to examine whether existing requirements, including age checks and content controls, adequately apply. [1]

Alongside legal and regulatory scrutiny, ministers plan non‑legislative steps: Kendall said she will host an event with the NSPCC to examine AI’s risks to children and the Government will run a public education campaign in parts of England encouraging parents to talk to their children about online risks, including conversational AI. Ofcom has been asked for clarity on its expectations for any chatbots that fall within the regime. [1][2]

With pressure mounting from families, campaigners and ministers, the Government is signalling a two‑track approach: pressing Ofcom to enforce existing duties, while preparing to extend the statutory regime to cover generative chatbots if regulators and officials conclude new legislation is needed. That combination reflects growing consensus that platform safety rules must catch up with rapidly evolving AI services. [2][3][1]

📌 Reference Map:

- [1] (Mirror) – Paragraph 1, Paragraph 2, Paragraph 5, Paragraph 6, Paragraph 7

- [2] (Reuters) – Paragraph 1, Paragraph 3, Paragraph 6, Paragraph 7

- [3] (The Guardian) – Paragraph 3, Paragraph 7

- [4] (Reuters) – Paragraph 4

- [5] (Time) – Paragraph 4

- [7] (Reuters) – Paragraph 4

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes:

The narrative is recent, with the earliest known publication date being November 20, 2025. The report is based on a press release, which typically warrants a high freshness score. However, similar content has appeared more than 7 days earlier, which should be flagged. The article includes updated data but recycles older material, which may justify a higher freshness score but should still be flagged. ([theguardian.com](https://www.theguardian.com/media/2025/nov/20/ofcom-at-risk-of-losing-public-trust-over-online-harms-says-liz-kendall?utm_source=openai))

Quotes check

Score: 7

Notes:

The direct quote from Liz Kendall appears in earlier material, indicating potential reuse. The wording varies slightly, but the core message remains the same. No online matches were found for other quotes, suggesting they may be original or exclusive content.

Source reliability

Score: 6

Notes:

The narrative originates from a reputable organisation, The Guardian, which strengthens its reliability. However, the report is based on a press release, which can sometimes be biased or incomplete. The presence of multiple references to other reputable outlets adds credibility.

Plausibility check

Score: 8

Notes:

The claims about AI chatbots being scrutinised under the Online Safety Act are plausible and align with recent discussions on the topic. The narrative lacks supporting detail from other reputable outlets, which should be flagged. The language and tone are consistent with the region and topic, and there are no excessive or off-topic details.

Overall assessment

Verdict (FAIL, OPEN, PASS): OPEN

Confidence (LOW, MEDIUM, HIGH): MEDIUM

Summary:

The narrative is recent and based on a press release, which typically warrants a high freshness score. However, similar content has appeared more than 7 days earlier, and the report lacks supporting detail from other reputable outlets, which should be flagged. The quotes are partially reused, and the source is reputable but based on a press release. The claims are plausible, but the lack of supporting detail from other reputable outlets is a concern.