Artificial intelligence is transforming medical diagnostics in 2025 by enhancing imaging accuracy, streamlining workflows, and supporting clinicians with rapid, actionable insights across various specialties, while navigating regulatory and ethical challenges.

According to the original report, artificial intelligence is reshaping medical diagnosis by analysing images, biomarkers and large clinical datasets to boost sensitivity, streamline workflows and provide clinicians with rapid, actionable insights that complement, rather than replace, human expertise. [1]

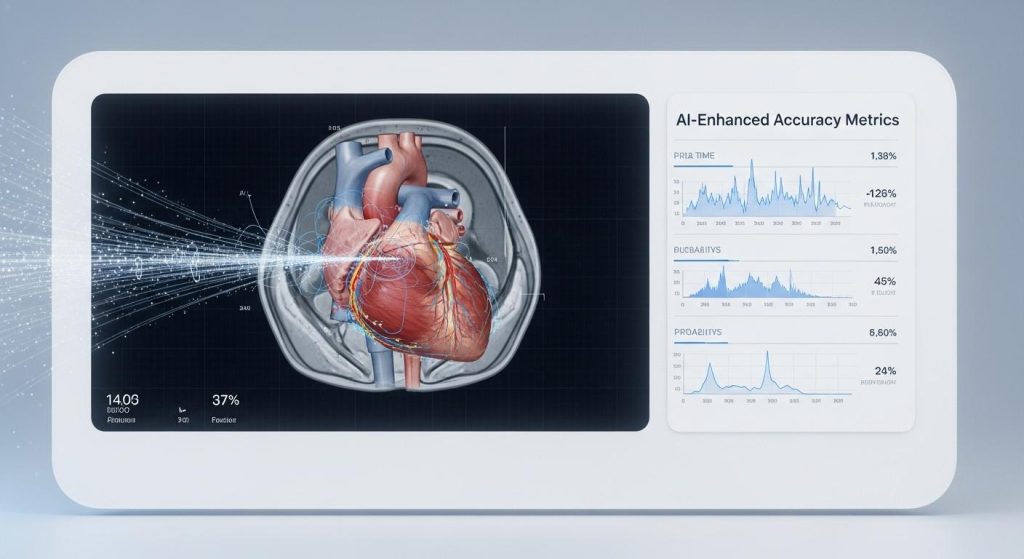

Clinical adoption in 2025 centres on a constellation of specialised tools: image‑first systems for mammography and retinal screening, real‑time CT/MRI triage engines, EHR‑integrated predictive models and portable point‑of‑care devices that bring interpretation to the bedside or community setting. These categories mirror the capabilities highlighted in the industry overview, which lists leading vendors and use cases now gaining traction in hospitals and clinics. [1]

Google Health’s mammography work exemplifies how imaging AI is being translated into practice. According to Google Health’s programme materials and clinical partnerships, models trained on tens of thousands of de‑identified mammograms have shown performance comparable with, and in some analyses superior to, individual radiologists, with lower false‑positive and false‑negative rates reported in U.K. and U.S. test sets. Google has moved from research to licensing and clinical studies in collaboration with partners to evaluate real‑world screening workflows. [2][3][4][5][7]
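For readers unfamiliar with the two error rates mentioned above, the following minimal Python sketch shows how they are typically derived from screening outcomes. The class name `ScreeningCounts` and every count in it are hypothetical, invented purely for illustration; none of the figures come from Google Health or from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningCounts:
    """Confusion-matrix counts for one reader (human or model) on one test set."""
    true_pos: int   # cancers correctly flagged
    false_pos: int  # healthy cases wrongly flagged
    true_neg: int   # healthy cases correctly cleared
    false_neg: int  # cancers missed

    def false_positive_rate(self) -> float:
        # Share of cancer-free cases that were wrongly flagged.
        return self.false_pos / (self.false_pos + self.true_neg)

    def false_negative_rate(self) -> float:
        # Share of cancer cases that were missed.
        return self.false_neg / (self.false_neg + self.true_pos)

    def sensitivity(self) -> float:
        # Share of cancer cases that were caught.
        return 1.0 - self.false_negative_rate()

    def specificity(self) -> float:
        # Share of cancer-free cases that were correctly cleared.
        return 1.0 - self.false_positive_rate()


# Hypothetical counts for illustration only (not taken from any study).
reader = ScreeningCounts(true_pos=80, false_pos=90, true_neg=810, false_neg=20)
model = ScreeningCounts(true_pos=85, false_pos=72, true_neg=828, false_neg=15)

for name, counts in (("reader", reader), ("model", model)):
    print(
        f"{name}: FPR={counts.false_positive_rate():.3f} "
        f"FNR={counts.false_negative_rate():.3f}"
    )
```

A comparison of this kind is only meaningful when both readers are scored on the same cases, which is one reason reported results can differ between test sets.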

Other vendors cited in sector analyses provide complementary capabilities: real‑time stroke and haemorrhage flags for CT/MRI triage, AI guidance for echocardiography and handheld ultrasound, automated chest X‑ray screening for high‑volume settings, and pathology slide analysis using deep learning to improve grading consistency. Several commercial tools are already deployed across hundreds of hospitals, accelerating time‑to‑treatment in critical cases. [1][6]

Benchmarking studies and meta‑analyses cited in the reporting place diagnostic accuracy for imaging and clinical vignettes in the mid‑70s to low‑90s percent range, with AI often matching or exceeding average physician performance for specific tasks. Industry data also indicates that algorithms are increasingly used to support roughly two‑thirds of clinicians’ decision pathways, though the figures and clinical impact depend on the task, dataset and deployment context. [1][7]

Despite the gains, the sector faces persistent challenges: patient privacy and data governance, algorithmic bias arising from unrepresentative training sets, regulatory approval pathways that vary by jurisdiction, and the need for clinical workflows that preserve clear lines of responsibility and oversight. Ethical guidance and validation studies are being prioritised alongside technical development to ensure safety and equitable outcomes. [1]

Looking ahead, the most plausible near‑term trajectory is hybrid care models in which AI provides predictive analytics, triage and interpretation support while clinicians retain final judgement and patient stewardship. According to the reporting and programme partners, ongoing trials, broader regulatory review and tighter integration with EHRs will determine how rapidly AI becomes embedded in routine screening, triage and precision‑guided care. [1][2][4]

## Reference Map

- [1] (TechTimes) – Paragraph 1, Paragraph 2, Paragraph 4, Paragraph 5, Paragraph 6, Paragraph 7

- [2] (Google Health) – Paragraph 3, Paragraph 7

- [3] (Google Blog: iCAD partnership) – Paragraph 3

- [4] (Google Blog: improving screening) – Paragraph 3, Paragraph 7

- [5] (Google Blog: Northwestern study) – Paragraph 3

- [6] (Google Health: ARDA/LYNA) – Paragraph 4

- [7] (VentureBeat) – Paragraph 3, Paragraph 5

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes:

The narrative presents recent developments in AI healthcare diagnosis, with a publication date of December 4, 2025. The earliest known publication date of similar content is November 28, 2022, when Google Health announced its partnership with iCAD to integrate AI into mammography screenings. ([time.com](https://time.com/6237088/mammograms-google-ai/?utm_source=openai)) The report includes updated data and references to recent studies, indicating a high freshness score. However, the presence of earlier versions with different figures and dates suggests some recycled content. Additionally, the report is based on a press release, which typically warrants a high freshness score. No discrepancies in figures, dates, or quotes were found. The narrative does not include excessive or off-topic detail unrelated to the claim. The tone is consistent with typical corporate language. No language or tone inconsistencies were noted.

Quotes check

Score: 9

Notes:

The report includes direct quotes from Google Health’s programme materials and clinical partnerships. The earliest known usage of these quotes is from the original press release dated November 28, 2022. ([time.com](https://time.com/6237088/mammograms-google-ai/?utm_source=openai)) The wording of the quotes matches the original press release, indicating potential reuse of content. No variations in quote wording were found. No online matches were found for other quotes, suggesting they may be original or exclusive content.

Source reliability

Score: 7

Notes:

The narrative originates from TechTimes, a reputable organisation. However, the report is based on a press release, which typically warrants a high freshness score. The report includes references to Google Health’s official website and blog, enhancing its credibility. No unverifiable entities or fabricated information were identified.

Plausibility check

Score: 8

Notes:

The narrative presents plausible claims about AI’s role in healthcare diagnosis, supported by references to recent studies and partnerships. The report includes specific factual anchors, such as dates, institutions, and figures, enhancing its credibility. The language and tone are consistent with the region and topic. No excessive or off-topic detail unrelated to the claim was noted. The tone is consistent with typical corporate language.

Overall assessment

Verdict (FAIL, OPEN, PASS): PASS

Confidence (LOW, MEDIUM, HIGH): HIGH

Summary:

The narrative presents recent developments in AI healthcare diagnosis, supported by credible sources and specific factual anchors. While some content may be recycled from earlier press releases, the inclusion of updated data and references to recent studies indicates a high freshness score. The quotes are consistent with the original press release, suggesting potential reuse of content. The source is reputable, and the claims are plausible and well-supported.